Combining Oversampling and Undersampling for Imbalanced Classification? SMOTE-Tomek and SMOTE-ENN?

Oversampling and undersampling have some flaws. So, to handle highly imbalanced datasets, there is a method that combines both, oversampling and undersampling. Let’s go!

Why is Imbalanced Classification?

The imbalanced classification is a condition where the class distribution of our training data has a severe slope. It is a problem because it can influence the performance of our model algorithms. Its results tend to lead by the majority class and completely ignore the minority class. The main reason why this is such a problem is that minority classes are often the classes we want to dive into the most.

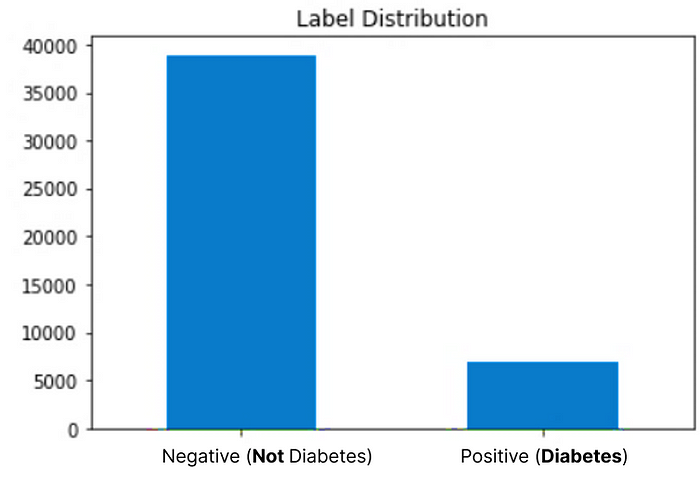

For example, when we build a classifier to classify a person at risk of diabetes or not, the real dataset tends to have more data on someone not at risk of diabetes than at risk of diabetes — it would be very worrying if the number of people at risk of diabetes was the same as someone who was not at risk of diabetes.

How to Handle It?

An approach to handling the classification imbalanced problem is randomly resampling the training dataset. There are two main techniques to perform randomly resampling:

Oversampling by duplicating examples from the minority classes. Several oversampling methods are

- Random Over Sampling (ROS) duplicates the minority class data by repeating the original samples until the class distribution is balanced.

- Synthetic Minority Oversampling Technique (SMOTE) duplicates the minority class data by generating artificial samples based on interpolating between original data of the minority class close to each other.

- Adaptive Synthetic (ADASYN) learns to generate some synthetic data samples for the minority classes based on dynamic adjustment of weights and adaptive learning of data distributions to cut down the bias.

Undersampling by deleting examples from the majority classes. Several oversampling methods are

- Random Under Sampling (RUS) removes the majority class samples to get balanced data.

- Tomek Links detects pairs of nearest neighbors that have different classes to define the boundary between classes

- Edited Nearest Neighbours (ENN) select some samples to be removed to be balanced with the minority class

Those techniques have pros and cons. Oversampling can lead to model overfitting because this technique duplicates minority instances, while undersampling removes the majority class instance that is sometimes important.

Therefore, let me introduce to you all combined both random oversampling and undersampling. Interesting results may be achieved~

Combining Random Oversampling and Undersampling

We can slightly oversample the minority class, which increases the bias in the minority class sample, while we also slightly undersample the majority class to reduce bias in the majority class sample.

By leveraging an imbalanced-learn framework, we are able to use the sampling_strategy attribute in our oversampling and undersampling techniques. See this article for more detail!

over = RandomOverSampler(sampling_strategy=0.5)

under = RandomUnderSampler(sampling_strategy=0.8)

X_over, y_over = over.fit_resample(X, y)

print(f"Oversampled: {Counter(y_over)}")Oversampled: Counter({0: 9844, 1: 4922})X_combined_sampling, y_combined_sampling = under.fit_resample(X_over, y_over)

print(f"Combined Random Sampling: {Counter(y_combined_sampling)}")Combined Random Sampling: Counter({0: 6152, 1: 4922})

Instead of doing the combination manually, we can now use a predefined Combination of Resampling Methods. There are combinations of oversampling and undersampling methods that have proven effective and together may be considered resampling techniques, SMOTE with TomekLinks undersampling and SMOTE with Edited Nearest Neighbors undersampling.

SMOTE-Tomek

Combination of SMOTE and Tomek Links Undersampling

- SMOTE duplicates the minority class data by generating artificial samples based on interpolating between original data of the minority class close to each other.

- Tomek Links detects pairs of nearest neighbors that have different classes to define the boundary between classes. Tomek will increase the separation between classes.

Python Implementation: imblearn library

R Implementaion: unbalanced library

Specifically, first, the SMOTE method is applied to oversample the minority class to a balanced distribution. Tomek links to the over-sampled training set as a data cleaning method. Thus, instead of removing only the majority class examples that form Tomek links, examples from both classes are removed

In this work, only majority class examples that participate in a Tomek link were removed, since minority class examples were considered too rare to be discarded. […] In our work, as minority class examples were artificially created and the data sets are currently balanced, then both majority and minority class examples that form a Tomek link, are removed.

— Balancing Training Data for Automated Annotation of Keywords: a Case Study, 2003.

SMOTE-ENN

Combination of SMOTE and Edited Nearest Neighbors Undersampling

- SMOTE may be the most popular oversampling technique and can be combined with many different undersampling techniques.

- Edited Nearest Neighbours (ENN) select some samples to be removed to be balanced with the minority class. This rule involves using k=3 nearest neighbors to locate those examples in a dataset that are misclassified and that are then removed. It can be applied to all classes or just those examples in the majority class.

ENN is providing more in-depth cleaning because more aggressive at downsampling the majority class than Tomek. Because it removes any example whose class label differs from the class of at least two of its three nearest neighbors.

… ENN is used to remove examples from both classes. Thus, any example that is misclassified by its three nearest neighbors is removed from the training set.

— A Study of the Behavior of Several Methods for Balancing Machine Learning Training Data, 2004.

You can deep dive into the use of this method here.